In Maine's medical field, artificial intelligence takes on a mountain of data

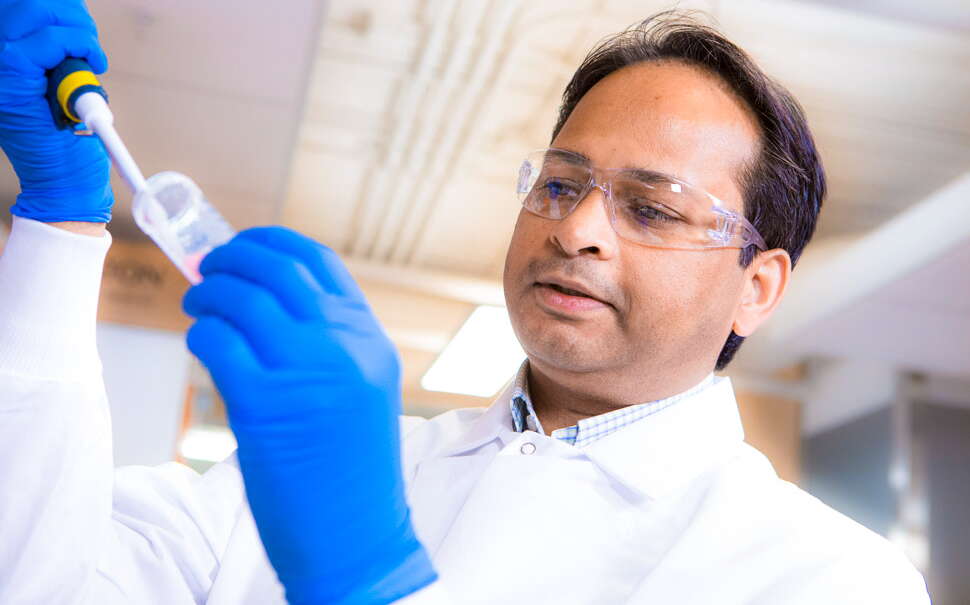

Photo / Tim Greenway

From left, MaineHealth Chief Academic Officer Doug Sawyer, Roux Institute data scientist Rai Winslow, MaineHealth project manager Felistas Mazhude and MaineHealth cardiac surgeon Bob Kramer in a room in the cardiac surgery intensive care unit at Maine Medical Center in Portland. The monitor behind them is among the equipment used in the AI project.

Hospitals and research labs are leveraging big health data to prevent, diagnose and treat disease, conduct early research and accelerate drug discovery.

According to a recent panel hosted by the Roux Institute at Northeastern University, artificial intelligence has the potential to transform Maine’s health care and life sciences sectors through use in clinical practice, in research and “translational medicine,” and in hospital operations — all involving massive amounts of data to compute on behalf of human health.

The life sciences community is adopting AI for its ability to drastically reduce the time needed to translate massive amounts of continuously generated data into treatments and therapies for clinical use.

Researchers are also careful to emphasize that clinical practice will never depend solely on AI-generated findings. There will always be humans in the mix who will use the findings as one more informational tool on which to base their practices.

“We’ll see a blossoming of AI and machine learning approaches in understanding the fundamental basis of disease and how to target cures,” says Raimond Winslow, director of life sciences and medical research at the Roux Institute in Portland.

Countless blips

The Roux Institute is working with MaineHealth on a project called HEART, for Health Care Enabled by AI in Real Time.

The project looks at how to better identify cardiac arrest patients at risk of serious complications and to prevent adverse events while they’re recovering from heart surgery.

“A lot of data is looked at all the time by clinicians but not necessarily captured in a way that can lead to the more sophisticated analysis that machine learning can do,” says Dr. Douglas Sawyer, HEART’s lead and MaineHealth’s chief academic officer.

Typically, data generated by devices such as heart monitors are ephemeral. They appear as blips and are gone. The goal is to capture the countless blips generated by all of the devices used in a patient’s care and analyze patterns.

Teams from Northeastern University and MaineHealth began the project two years ago as an experiment using a limited number of retrospective data elements collected by hand from thousands of patients over decades. The retrospective sets were used to build a clinically useful AI-enabled prediction tool, which proved successful at the theoretical level.

The next phase launched in January with the ongoing collection of real-time data from MaineHealth heart surgery patients in the ICU. New medical equipment was acquired to allow all data elements, usually generated piecemeal, to be collected and stored in a cloud-based server. So far, data have been collected from hundreds of patients.

Finding patterns

“Usually some data is collected and saved for some period of time, but not beyond the patient’s hospital stay,” says Sawyer. “And then they go into the OR and that’s different data and it’s not integrated. We’re bringing it all together and asking, ‘Can we learn something about who might have a bad outcome or might be at a high risk for those outcomes?’”

A patient’s condition can change for the worse in a short period of time. Machine learning algorithms “find these patterns and amplify them, and turn them into a signal that’s sufficiently robust that the caregiver can say, ‘This patient is heading toward septic shock,’” for example, says Winslow. “Humans can’t do this, and they can’t do it every moment.”
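
To make the idea of turning a stream of readings into a signal concrete, here is a minimal sketch in Python. It is not the HEART project's method: it swaps in a simple rolling z-score in place of a trained machine learning model, and the class name `DriftAlarm`, the window size, the threshold and the sample heart-rate values are all assumptions made up for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class DriftAlarm:
    """Hypothetical toy detector: flags a reading that departs sharply
    from the recent baseline. Illustrative only, not a clinical tool."""

    def __init__(self, window=5, threshold=3.0):
        # Keep only the most recent `window` readings as the baseline.
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` is an outlier relative to the baseline."""
        alert = False
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # A z-score measures how many standard deviations the new
            # reading sits from the recent average.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                alert = True
        self.history.append(value)
        return alert


# Made-up heart-rate readings; the last one jumps well above the baseline.
readings = [80, 81, 79, 80, 81, 80, 110]
alarm = DriftAlarm(window=5, threshold=3.0)
alerts = [alarm.update(r) for r in readings]
```

In this sketch only the final reading raises an alert. A real system of the kind the article describes would combine many device streams and learn its patterns from patient data rather than rely on a fixed threshold.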

If predictions can be made reliably, caregivers will have the advance warning needed to decide whether and how to intervene.

“And then, with early intervention, we’ll be able to reduce the rate of negative outcomes and help patients,” says Winslow.

Winslow and Sawyer emphasize that AI-enabled predictions are meant only as added information for caregivers, not as the final word.

“Physicians are always going to be at the tip of the spear, holding the fundamental responsibility for helping patients and families make health care decisions,” says Winslow. “We’re giving them new information and new insights.”

It will be several years before there are any actionable outcomes, says Sawyer. “We think of the HEART project as the first of many,” he adds.

Monitoring revolution

In Bar Harbor, Jackson Laboratory uses mice as a proxy for the study of human disease and drug development.

Vivek Kumar, an associate professor, leads a lab that combines the fields of genetics, neuroscience and computer science in order to develop better ways to understand observable outward traits in mice as they relate to their brain activity and genetic architecture.

“The animal can’t tell you how it’s feeling,” says Kumar. “So, science derives the emotional or cognitive state of the animal through a series of tests. We ask the animals to do certain tasks and we monitor their natural behaviors. We then link these behaviors to altered neural circuits and altered genetics.”

He cautions that “observing complex behaviors in animals is hard and traditionally humans have been the best behavior [observers]. This naturally limits scalability and reproducibility.”

Kumar is working to revolutionize that monitoring.

“Instead of humans watching the animals, we have video cameras watching and we use machine learning, computer vision and AI methods to automate that task,” he says.

The technology records everything the animal does over long periods of time. That information is used to understand the animal’s neural function and the genetic changes that are happening.

For example, mouse models are often used for the study of autism spectrum disorders. Through long-term monitoring and analysis of the data collected in these models, Kumar’s lab discovered changes in their fine motor movements, such as gait and postural deficits.

“Cognitive changes in these models, such as communication and social deficits, are challenging to measure at scale,” he says. “But the manifestation of physical movement changes provides a tool for what’s happening in brain activity and at the genetic level. It is also high-throughput and makes it possible to test the efficacy of candidate drug treatments.”

Ultimately, he says, the mouse can be used as a tool to discover better treatment through these AI approaches.

The project uses camera set-ups, with infrared illumination at night, to record mice interacting. The collected data is used to develop algorithms that go through every frame, and billions of data points from every part of each animal, to detect features such as gait, posture, how much an individual animal wobbles and the length of its stride. Patterns in the data help researchers understand more-complex internal cognitive states.
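
As a rough illustration of how frame-by-frame tracking data could become gait measurements, the Python sketch below computes stride length and a simple wobble score. It is an assumption-laden toy, not the lab's actual pipeline: the function names, the input format (one paw position and one ground-contact flag per video frame) and the sample numbers are all hypothetical.

```python
from statistics import pstdev


def stride_lengths(paw_x, contact):
    """Distance between successive touchdowns of one paw.

    paw_x   : per-frame position of the paw along the direction of travel
    contact : per-frame flag, True while the paw is on the ground
    (Both inputs are hypothetical outputs of a pose-tracking step.)
    """
    # A touchdown is the first frame of each ground-contact period.
    touchdowns = [
        paw_x[i]
        for i in range(len(contact))
        if contact[i] and (i == 0 or not contact[i - 1])
    ]
    return [abs(b - a) for a, b in zip(touchdowns, touchdowns[1:])]


def wobble(lateral_offsets):
    """Spread of the body's side-to-side offset from its path, per frame."""
    return pstdev(lateral_offsets)


# Made-up tracking data for ten frames of one paw.
paw_x = [0, 0, 1, 2, 3, 3, 4, 5, 6, 6]
contact = [True, True, False, False, True, True, False, False, True, True]
strides = stride_lengths(paw_x, contact)

# Made-up side-to-side offsets of the body for four frames.
sway = wobble([1, -1, 1, -1])
```

Here the paw touches down at positions 0, 3 and 6, giving two strides of length 3, and the wobble score is the standard deviation of the side-to-side offsets. Comparing such numbers across animals is one plausible way differences in gait could be quantified at scale.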

The majority of the work initially was funded within JAX and continues through agencies within the National Institutes of Health and the Simons Foundation, a private foundation that funds autism research.

The project is still in the realm of basic research. Ultimately, the goal is to translate the findings into treatments.

As an educator, Kumar also uses his lab to train pre- and post-doctoral students in machine learning and computer vision.

“We trained ourselves to do a lot of this work,” he says of his generation of researchers. “Now we have a good group of computation people at JAX. I feel like we’re helping in that education mission.”

Ambient listening

In January 2023, MaineHealth launched a pilot program using AI-enabled “ambient listening” technology that records the conversation during an office visit and then produces the clinical documentation for the patient’s medical record.

Early on, the project was identified as an important tool to address burnout.

“The app sits on your phone and records the conversation that you have with your patient, and the AI technology generates the clinical notes from that recording,” says Dr. Rebecca Hemphill, MaineHealth’s chief medical information officer.

The technology is able to process elements of the conversation, such as the physical exam, data review and discussion of the patient’s health care plan, and organize them into clinical notes.

“That conversation doesn’t necessarily happen sequentially over the course of your visit,” Hemphill says. “The AI is able to pull out what needs to be there and what needs to be in the right place. Sometimes the conversation strays and you talk about your vacation and your kids or the dog. The AI removes the kids and the dog and the vacation.”

Surveys conducted during the pilot found that the experience improved for both patients and providers because the provider isn’t spending time typing notes. The technology also reduces the time providers spend completing documentation.

However, AI-generated notes are not perfect and require provider review and edits.

“It can make mistakes, so you do have to review it and make corrections,” says Hemphill.

She continues, “But overall it still saves time. We get comments from our providers who are using this saying this has been life-changing.”

The technology is now being expanded to more providers.

“The hope, ultimately, is that anybody who wants to use it will be able to,” she says. “There’s a lot of interest.”

Other projects in the works use AI to review patient portal messages and craft suggested responses that can be used and/or edited, and to evaluate and categorize messages that come in from patients and route them to the right department.

In general, says Hemphill, there’s excitement across the organization about AI’s potential for a variety of uses. At the same time, there’s caution. AI is only as good as the data set being used and can make mistakes.

Keeping patient health information secure is also key.

“It’s critically important that any tool is able to maintain security,” she says.

“As an organization, we’ve created an AI advisory group made up of leaders across the health system, and we’re trying to educate ourselves about AI — both the pitfalls and the benefits,” says Hemphill. “We’re trying to identify areas where AI can be safely and ethically and effectively leveraged to help in the care of our patients.”
